DeepFreak: Learning Crystallography Diffraction Patterns with Automated Machine Learning

A detailed description can be found in this paper.

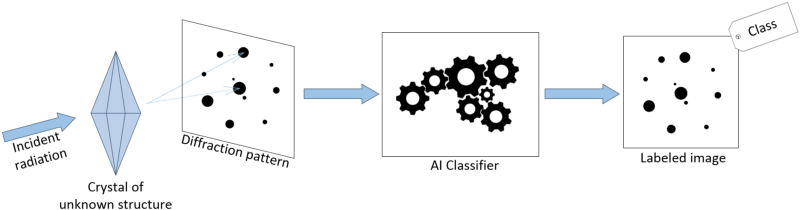

Crystallography is the science that studies the properties of crystals. It has been a central tool in many disciplines, including chemistry, geology, biology, materials science, metallurgy, and physics, and has led to substantial advances in, for instance, drug development for fighting diseases. In crystallography, a crystal is irradiated with an X-ray beam that strikes the crystal and produces an image with a diffraction pattern (Figure 1; see this video for more information on crystal diffraction). This diffraction pattern is then used to analyze the crystal’s structure. Serial Crystallography, in turn, refers to a more recent crystallography technique for investigating the properties of microcrystals using an X-ray free-electron laser.

Recent technological advances have automated crystallography experiments, allowing researchers to generate diffraction images at unprecedented speeds. However, no automated system currently exists to provide real-time analysis of the diffraction images produced, so scientists who have been specifically trained to understand these diffraction patterns must screen the images manually. This process is not only error-prone but also slows down the overall discovery process.

In this blog post, we describe our work on the automation of serial crystallography image screening. To this end, we have developed:

- a new serial crystallography dataset called DiffraNet that can be used for machine learning training, validation, and testing;

- several automated classification approaches, including a novel end-to-end Convolutional Neural Network (CNN) topology and carefully crafted combinations of feature extractors, Random Forests (RF), and Support Vector Machines (SVM).

All the material is publicly available. Our models have been fine-tuned using AutoML and achieve up to 98.5% and 94.51% accuracy on synthetic images and real images, respectively.

The DiffraNet Dataset

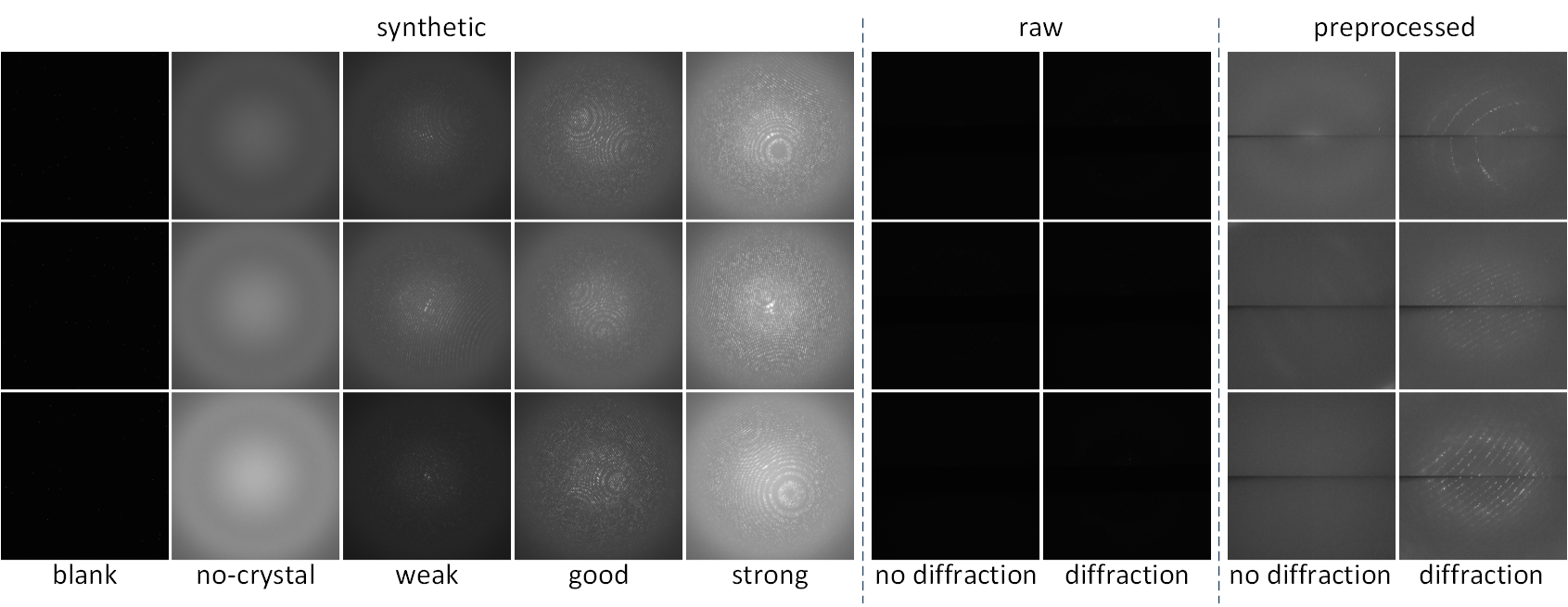

DiffraNet is a dataset composed of both real and synthetic diffraction images (see Figure 2). We have generated DiffraNet in order to train and evaluate crystallography diffraction image classifiers. However, generating and accurately labeling real diffraction images is an expensive and error-prone process. Thus, we devised a simulation procedure to generate accurately labeled synthetic diffraction images. Our procedure relies on the nanoBragg simulator, a state-of-the-art tool for simulating diffraction patterns under different experimental setups. The simulation procedure was designed in collaboration with crystallography experts from the SLAC National Accelerator Laboratory and researchers from Stanford and UFMG.

We have generated 25,000 synthetic diffraction images for DiffraNet and labeled them as blank, no-crystal, weak, good, or strong. These classes denote possible outcomes from crystallography experiments. The first two classes denote images with no diffraction patterns (an undesired outcome) and the other three denote images with varying degrees of diffraction. Our main goal in DiffraNet is to distinguish between images with and without diffraction patterns so that images without diffraction patterns can be discarded and downstream analysis can focus on the most promising images.
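To make the relationship between the five classes and the binary goal concrete, here is a minimal sketch of collapsing labels and discarding images without diffraction. The label strings follow the class names above; the function names and image ids are hypothetical and are not taken from the released code.

```python
# Label names follow the five classes described in the text.
NO_DIFFRACTION = {"blank", "no-crystal"}
DIFFRACTION = {"weak", "good", "strong"}


def to_binary(label: str) -> str:
    """Collapse a five-class label into diffraction / no-diffraction."""
    if label in DIFFRACTION:
        return "diffraction"
    if label in NO_DIFFRACTION:
        return "no-diffraction"
    raise ValueError(f"unknown label: {label!r}")


def keep_promising(predictions):
    """Keep only the (image_id, label) pairs predicted to show diffraction."""
    return [(image_id, label) for image_id, label in predictions
            if to_binary(label) == "diffraction"]


# Illustrative predictions; the image ids are made up.
predictions = [("img_001", "blank"), ("img_002", "strong"),
               ("img_003", "no-crystal"), ("img_004", "weak")]
kept = keep_promising(predictions)
print(kept)
```

Only the images labeled weak, good, or strong survive the filter, which is the screening step the dataset is meant to automate.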

DiffraNet also comprises 457 real diffraction images. Compared to the synthetic images, real images are notably darker and noisier. Real images also include a horizontal shadow across the middle that blocks part of the diffracted beams. We preprocessed these images to remove the horizontal shadow and make the patterns more visible. The real images were manually labeled by an expert as diffraction or no-diffraction, since our main goal is to distinguish between those two classes.

Image Diffraction Classification

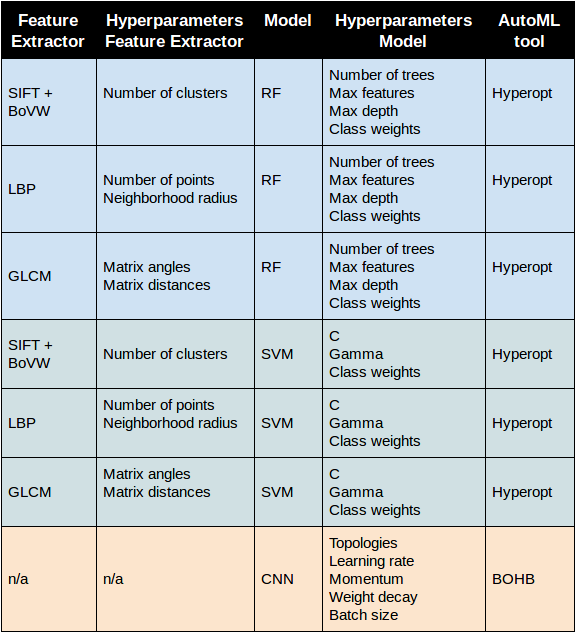

We explored several computer vision approaches for classification on DiffraNet (see Table 1). Our approaches include the combination of standard computer vision feature extractors (SIFT + BoVW, LBP, and GLCM) with RF and SVM classifiers, as well as our novel CNN diffraction architecture. Our CNN is dubbed DeepFreak, a playful portmanteau of deep learning and diffract with a small twist.
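For readers unfamiliar with the texture descriptors named above, the following is a rough, dependency-free sketch of a basic 3x3 Local Binary Pattern (LBP) histogram and a Grey-Level Co-occurrence Matrix (GLCM) contrast feature. The neighbourhood, offset, and choice of statistic are assumptions made for illustration and are not necessarily the tuned settings used for DiffraNet; in practice, an image-processing library would normally compute these features before passing them to an RF or SVM classifier.

```python
def lbp_histogram(image):
    """256-bin histogram of basic 3x3 LBP codes (border pixels are skipped)."""
    # Neighbour offsets, clockwise from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # Set the bit when the neighbour is at least as bright as the centre.
                if image[r + dr][c + dc] >= image[r][c]:
                    code |= 1 << bit
            hist[code] += 1
    return hist


def glcm(image, levels, dr=0, dc=1):
    """Co-occurrence counts of grey-level pairs for one pixel offset (dr, dc)."""
    matrix = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                matrix[image[r][c]][image[r2][c2]] += 1
    return matrix


def glcm_contrast(matrix):
    """Contrast: sum of p(i, j) * (i - j)^2 over the normalised GLCM."""
    total = sum(map(sum, matrix))
    return sum(count * (i - j) ** 2
               for i, row in enumerate(matrix)
               for j, count in enumerate(row)) / total


# Tiny made-up image with four grey levels (0..3).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
hist = lbp_histogram(image)
contrast = glcm_contrast(glcm(image, levels=4))
# One possible feature vector: normalised LBP histogram plus GLCM contrast.
features = [h / sum(hist) for h in hist] + [contrast]
```

The resulting fixed-length vector is the kind of input a Random Forest or SVM would be trained on.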

We used automated machine learning (AutoML) tools to fine-tune all of our models and feature extractors for diffraction image classification. These tools automatically search the space of possible feature extractors, models, and hyperparameters and find the best configuration for DiffraNet. We use Hyperopt to search for feature extractors and classifier hyperparameters jointly. Similarly, we use BOHB (a combination of Bayesian Optimization and HyperBand) to simultaneously search for the best topology and hyperparameters for DeepFreak. Both tools are based on Bayesian Optimization and Tree-structured Parzen Estimator (TPE) models. Table 1 summarizes which hyperparameters were automatically tuned and which tool we used for each approach.
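To give a feel for the HyperBand half of BOHB, here is a minimal sketch of successive halving, the budget-allocation loop that HyperBand is built on. It is not the Hyperopt or BOHB API: configurations are drawn at random instead of from a TPE model, and the search space and the `toy_validation_accuracy` objective are entirely hypothetical stand-ins for training a network.

```python
import math
import random


def sample_config(rng):
    """Draw one configuration from a toy, hypothetical search space."""
    return {
        "learning_rate": 10 ** rng.uniform(-4, -1),
        "num_layers": rng.choice([2, 3, 4, 5]),
    }


def toy_validation_accuracy(config, budget):
    """Stand-in for 'train for `budget` epochs and return validation accuracy'.

    Purely synthetic: best near learning_rate = 1e-2, improves with budget.
    """
    quality = 1.0 - abs(math.log10(config["learning_rate"]) + 2) / 3
    return quality * (1 - 0.5 ** budget)


def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Score every config on a small budget, keep the top 1/eta, then repeat
    with eta times more budget until a single config remains."""
    budget = min_budget
    while len(configs) > 1:
        ranked = sorted(configs, key=lambda c: evaluate(c, budget), reverse=True)
        configs = ranked[:max(1, len(configs) // eta)]
        budget *= eta
    return configs[0]


rng = random.Random(0)
candidates = [sample_config(rng) for _ in range(9)]
best = successive_halving(candidates, toy_validation_accuracy)
print(best)
```

The point of the design is that poor configurations are discarded after a cheap evaluation, so most of the training budget is spent on the few that look promising. BOHB additionally replaces the random sampling step with a model-based one.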

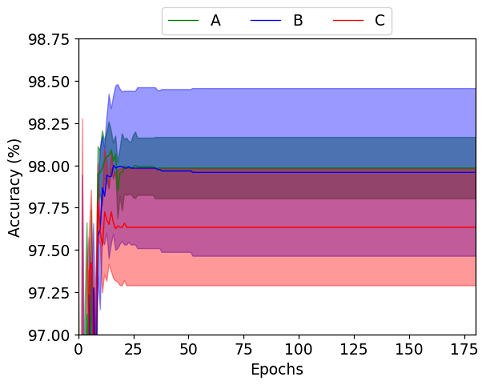

After fine-tuning, we analyzed the variability of the DeepFreak configurations found by our AutoML approach. We ran the three best configurations found by BOHB five times each and plotted the mean learning curve (thin line) and the 80% confidence interval (shaded area) for each configuration (dubbed A, B, and C for simplicity); see Figure 3. Among the three, A and B have the highest mean accuracy, and B has the broadest confidence interval. We use B for the rest of the results because it achieved the highest validation accuracy.
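A mean learning curve with a confidence band can be computed from repeated runs along the following lines. This is a sketch under stated assumptions: it uses a normal approximation for the 80% interval, which may differ from how the interval in Figure 3 was computed, and the accuracy values are made up.

```python
from statistics import NormalDist, mean, stdev


def mean_curve_with_interval(runs, confidence=0.80):
    """Per-epoch (low, mean, high) using a normal-approximation interval.

    `runs` is a list of learning curves of equal length, one per repeated run.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.28 for 80%
    summary = []
    for values in zip(*runs):  # iterate epoch by epoch across runs
        centre = mean(values)
        half_width = z * stdev(values) / len(values) ** 0.5
        summary.append((centre - half_width, centre, centre + half_width))
    return summary


# Made-up validation accuracies: five runs, three epochs each.
runs = [
    [0.90, 0.95, 0.97],
    [0.88, 0.94, 0.98],
    [0.91, 0.96, 0.97],
    [0.89, 0.95, 0.98],
    [0.92, 0.95, 0.97],
]
summary = mean_curve_with_interval(runs)
for epoch, (low, centre, high) in enumerate(summary, start=1):
    print(f"epoch {epoch}: {centre:.3f} [{low:.3f}, {high:.3f}]")
```

With only five runs per configuration, a Student's t interval would be somewhat wider than this normal approximation.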

Classification Results

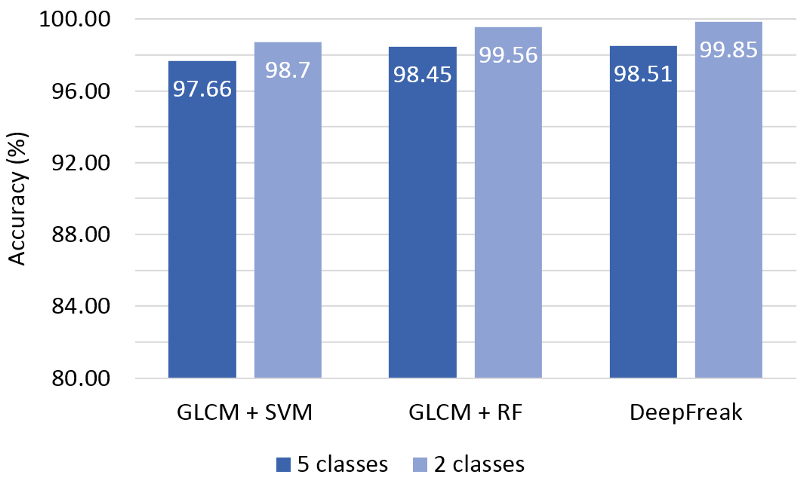

We first evaluate the best configuration for our classifiers over DiffraNet’s synthetic dataset. Figure 4 shows the accuracy of our models considering all five DiffraNet classes (dark blue) and only diffraction/no-diffraction classes (light blue).

DeepFreak achieved the highest accuracy in both cases, but we note that all of our models exceed 97.5% and 98.5% accuracy in the five-class and binary settings, respectively.

In addition, DeepFreak achieved near-perfect accuracy in the binary setting. This means DeepFreak can differentiate between synthetic images with and without diffraction patterns almost perfectly.
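The two settings can be scored from the same five-class predictions, as in the sketch below. The labels and predictions are made up for illustration and the function names are hypothetical; the example only shows why binary accuracy is never lower than five-class accuracy, since confusing weak with good no longer counts as an error.

```python
DIFFRACTION = {"weak", "good", "strong"}


def accuracy(true_labels, predicted_labels):
    """Fraction of positions where the two label sequences agree."""
    pairs = list(zip(true_labels, predicted_labels))
    return sum(t == p for t, p in pairs) / len(pairs)


def binary_accuracy(true_labels, predicted_labels):
    """Accuracy after collapsing each label to has-diffraction True/False."""
    return accuracy([t in DIFFRACTION for t in true_labels],
                    [p in DIFFRACTION for p in predicted_labels])


# Made-up ground truth and predictions for six images.
true = ["blank", "no-crystal", "weak", "good", "strong", "good"]
pred = ["blank", "blank", "good", "good", "strong", "no-crystal"]

five_class = accuracy(true, pred)
binary = binary_accuracy(true, pred)
print(five_class, binary)
```

Here three of the six five-class predictions are wrong, but only one of them crosses the diffraction/no-diffraction boundary.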

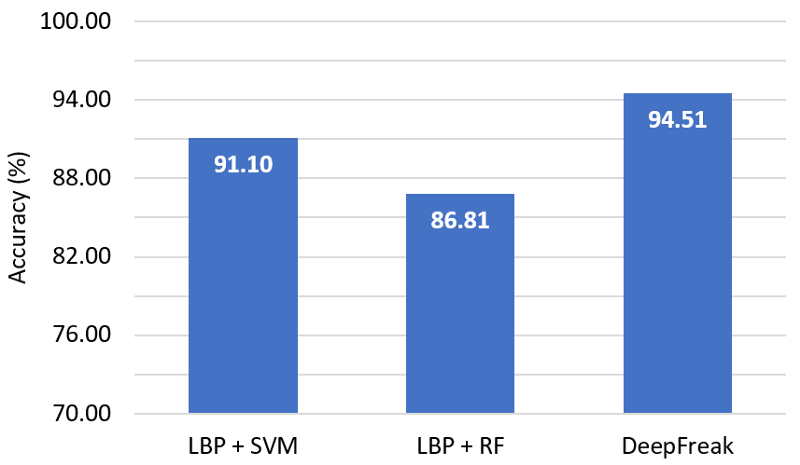

We also evaluate our models on DiffraNet’s real dataset. There is a noticeable difference between our synthetic and real images. Due to this “reality gap”, models trained on synthetic data often have degraded performance when tested on real data. To minimize this gap, we repeat our AutoML optimization, this time optimizing for performance on a validation set of real images. Figure 5 shows the accuracy of our models after this optimization.

Our models reach up to 94.51% accuracy on real images. This high accuracy shows that our synthetic dataset can be effectively used to train models for real diffraction image classification.

Summary

We have summarized our work on the automation of serial crystallography diffraction image classification. We developed a dataset, DiffraNet, with both synthetic and real images and explored computer vision and machine learning approaches for classification. Our models are publicly available and have been fine-tuned using AutoML tools. Results show that our methods are effective on diffraction image classification, even when facing the “reality gap”.

We believe that DiffraNet and our models will have a positive impact on Serial Crystallography, accelerating basic science research and its discoveries in disciplines such as chemistry, geology, biology, materials science, metallurgy, and physics.