NoScope: 1000x Faster Deep Learning Queries over Video

Video data is exploding – the UK alone has over 4 million CCTVs, and users upload over 300 hours of video to YouTube every minute. Recent advances in deep learning enable automated analysis of this growing amount of video data, allowing us to query for objects of interest, detect unusual and abnormal events, and sift through lifetimes of video that no human would ever want to watch. However, these deep learning methods are extremely computationally expensive: state-of-the-art methods for object detection run at 10-80 frames per second on a state-of-the-art NVIDIA P100 GPU. This is fine for one video, but it is untenable for real deployments at scale; to put these computational overheads in context, it would cost over $5 billion USD in hardware alone to analyze all the CCTVs in the UK in real time.

To address this enormous gap between our ability to acquire video and the cost to analyze it, we’ve built a system called NoScope, which is able to process video feeds thousands of times faster compared to current methods. Our key insight is that video is highly redundant, containing a large amount of temporal locality (i.e., similarity in time) and spatial locality (i.e., similarity in appearance in a scene). To harness this locality, we designed NoScope from the ground up for the task of efficiently processing video streams. Employing a range of video-specific optimizations that exploit video locality dramatically reduces NoScope’s amount of computation over each frame—while still retaining high accuracy for common queries.

In this post, we’ll walk through an example of each of NoScope’s optimizations and describe how NoScope stacks them end-to-end in a model cascade to obtain multiplicative speedups—up to 1000x faster for binary detection tasks on real-world webcam feeds.

A Prototypical Example from Taipei

Imagine we wanted to query the below webcam feed to determine when buses pass by a given intersection in Taipei (e.g., for traffic analysis):

How could we answer this query with today’s best-of-class visual models? We could run an object detection convolutional neural network (CNN) such as YOLOv2 or Faster R-CNN to detect buses by running the CNN over every frame of the video:

This approach works reasonably well—especially if we smooth the labels occuring in the videos—so what’s the problem? These models are expensive. These models run at 10-80 frames per second, which is fine if we’re monitoring a single video feed, but it’s not fine for thousands of video feeds.

Opportunity: Locality in Video

To do better, we can look at properties of the video feed itself. Specifically, video content is highly redundant. Let’s go return to our street corner video feed from Taipei and look at a few buses:

The buses look similar from the perspective of this video feed; we call this form of locality scene-specific locality, because, within the video feed, the objects of interest don’t look very different from one another (e.g., compared to a camera from another angle).

Moreover, in our Taipei street corner, it’s easy to see that not much changes on a per-frame basis, even though the bus is moving:

We call this temporal locality, because nearby frames look similar and contain similar contents.

NoScope: Exploiting Locality

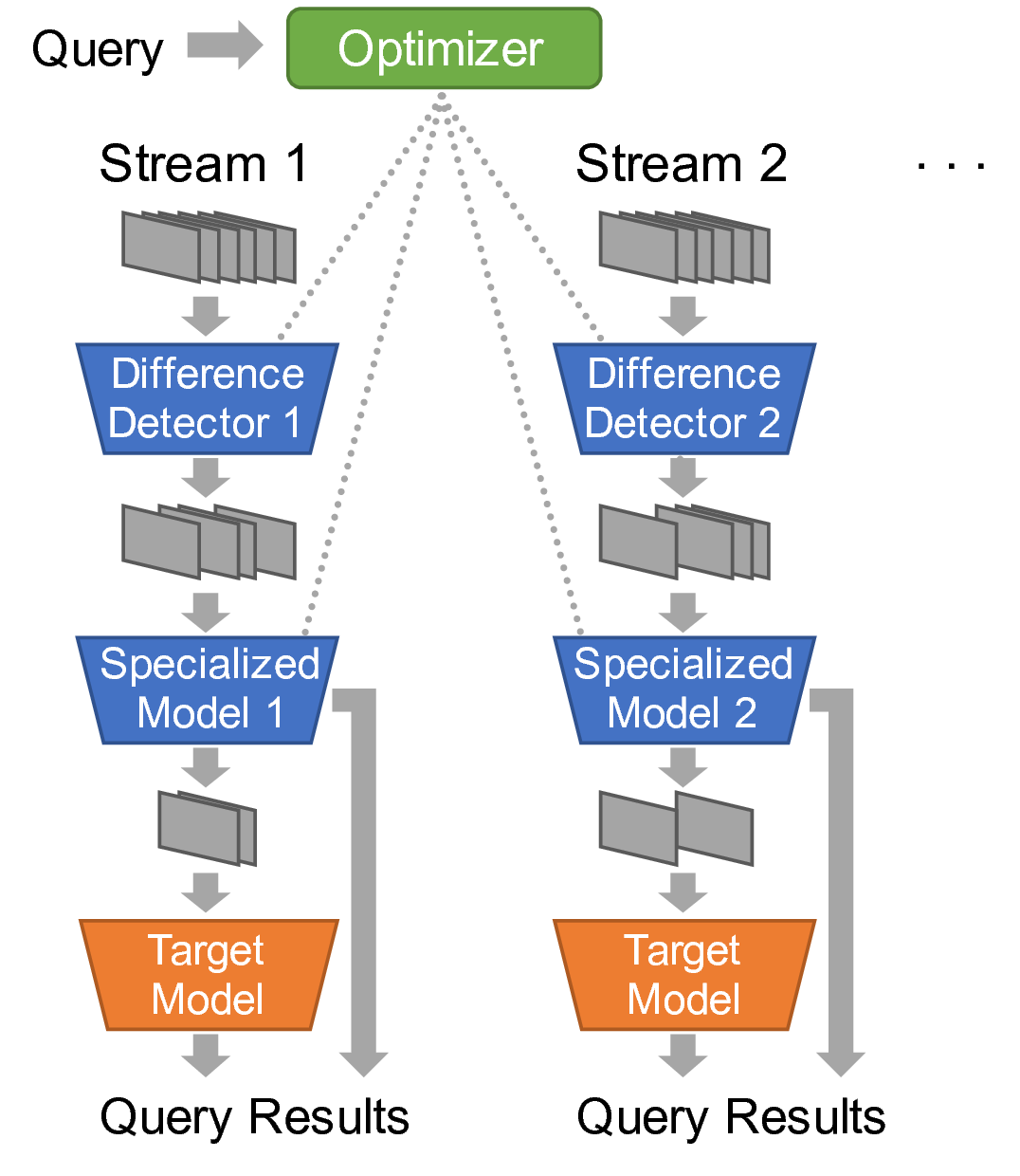

To capitalize on the above observations, we’ve been building a new query engine called NoScope, which can dramatically speed up video analytics queries. Given a video feed (or set of feeds) to query, an object (or objects) of interest to detect (e.g., “find frames with buses in the Taipei feed”), and a target CNN (e.g., YoloV2), NoScope outputs frames where the object appears according to YoloV2. However, NoScope is much faster than the input CNN: instead of simply running the expensive target CNN, NoScope learns a series of cheaper models that exploit locality, and, whenever possible, runs these cheaper models instead. Below, we describe two type of cheaper models: models that are specialized to a given feed and object (to exploit scene-specific locality) and models that detect differences (to exploit temporal locality locality). Stacked end-to-end, these models are 100-1000x faster at binary detection than the original CNN.

Exploiting Scene-specific Locality with Specialized Models

NoScope exploits scene-specific locality using specialized models, or fast models that are trained to detect objects of interest from the perspective of a specific video feed. Today’s CNNs can recognize a huge number of objects, including cats, skis, and toilets. However, if we want to detect buses in Taipei, we don’t care about cats, skis, or toilets; instead, we can train a model that only detects buses from the perspective of the Taipei camera.

To illustrate, the following images are real examples from the MS-COCO dataset and are examples of objects we don’t care about detecting.

NoScope’s specialized models are also CNNs, but they are much less complex (i.e., shallower) and much faster than generic object detection CNNs. How does this help? NoScope’s specialized models can run at over 15,000 frames per second compared to YOLOv2’s 80 frames per second. We can use these models as a surrogate for the original CNN.

Exploiting Temporal Locality with Difference Detectors

NoScope exploits temporal locality using difference detectors, or fast models that are designed to detect object changes. In many videos, the labels (e.g., “bus” or “no bus”) change much slower than the frames change (e.g., a bus is in frame for 5 seconds, but the video runs at 30 frames per second). To illustrate, the following videos are each 150 frames of video, but the label doesn’t change in either!

In contrast, today’s object detection models run on a frame-by-frame basis, independent of the actual changes between frames. The reason for this design decision is that models like YoloV2 are trained on static images and therefore treat videos as a sequence of images! Because NoScope has access to a particular video stream, it can train difference detection models that are aware of temporal dependencies. NoScope’s difference detectors are currently implemented using logistic regression models computed over frame-by-frame differences. These detectors run incredibly fast—over 100K frames per second on the CPU. Like the specialized models, NoScope can run these difference detectors instead of calling the expensive CNN.

Putting it all together

NoScope combines specialized models and difference detectors by stacking them in a cascade, or sequence of models that short-circuits computation. If the difference detector is confident that nothing has changed, NoScope drops the frame; otherwise, if the specialized model is confident in its label, NoScope outputs the label. And, for particularly tricky frames, NoScope can always fall back to the full CNN.

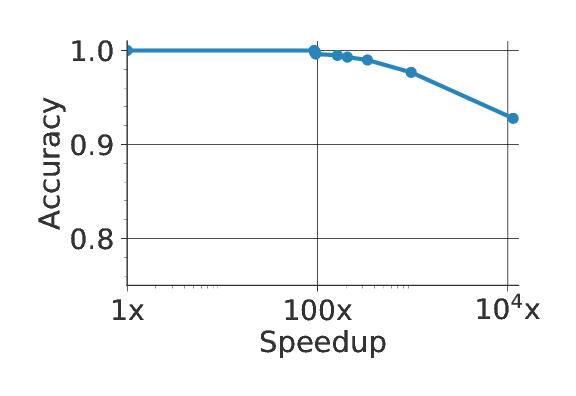

To configure this cascade and determine the confidence levels for each model, NoScope provides an optimizer that can smoothly trade off between accuracy and speed. Want faster execution? NoScope will pass fewer frames through the end-to-end cascade. Want more accurate results? NoScope will increase the threshold for short-circuiting a classification decision. As the plot below illustrates, the end result provides speedups of up to 10,000x over current methods.

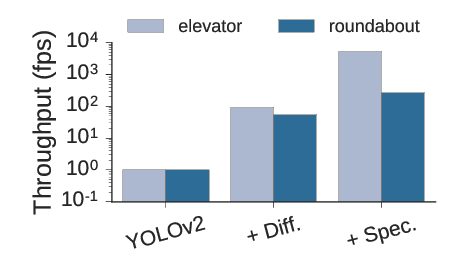

Both the difference detector and the specialized models contribute to these results; we performed a factor analysis where we started by using just YoloV2 and subsequently added each type of fast model to the cascade. Both are necessary for maximum performance:

To summarize NoScope’s cascading strategy, the optimizer first runs the slow reference model (YOLOv2, Faster R-CNN, etc.) over a given video stream to obtain gold-standard labels. Given these labels, NoScope trains a set of specialized models and difference detectors and uses a a holdout set to choose which specialized model and difference detector to use. Finally, NoScope’s optimizer cascades the trained models, with the option of calling the slow model when uncertain.

Conclusions

In conclusion, video data is remarkably rich in content but incredibly slow to query using modern neural networks. In NoScope, we exploit temporal locality to accelerate binary detection CNN queries over video by over 1000x, combining difference detection and specialized CNNs in a video-specific pipeline. The resulting pipelines run at over 8000 frames per second. We are continuing to improve NoScope to support multi-class classification, non-fixed angle cameras, and more complex queries. You can find all the details in our paper on arXiv and also try them out yourself.