Finding Errors in Perception Data With Learned Observation Assertions

Machine learning (ML) is increasingly being deployed in mission-critical settings, where errors can have disastrous consequences: autonomous vehicles have already been involved in fatal accidents. As a result, auditing ML deployments is becoming increasingly important.

An emerging body of work has shown the importance of one aspect of the ML deployment pipeline: training data. Much work in ML assumes that provided labels are ground truth and measure performance against this data. For example, benchmarks on domains ranging from autonomous vehicles to NLP question-answering track metrics against “ground truth” labels.

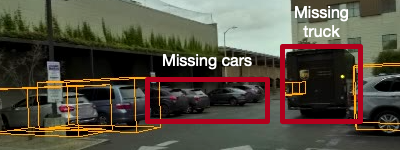

Unfortunately, many datasets are rife with errors! Below, we show errors from a publicly available autonomous vehicle dataset, which has been used to host competitions for models to be used in live autonomous vehicles. In fact, over 70% of the validation scenes contain at least one missing object box! Importantly, this dataset was generated by a leading labeling vendor that has produced labels for many autonomous vehicles companies, including Waymo, Uber, Cruise, and Lyft.

In this blog post, we’ll describe a new abstraction (learned observation assertions, LOA) and system (Fixy) for finding errors in perception data. Our paper on LOA is forthcoming in SIGMOD 2022 (preprint available here) and our code is open-sourced for general use. This work was done in conjunction with the Toyota Research Institute.

Learned Observation Assertions

Overview

LOA is an abstraction designed to find errors in ML deployment pipelines with as little manual specification of error types as possible. LOA achieves this be allowing users to specify features over ML pipelines. Given these features, LOA will automatically surface potential errors. We implemented LOA in a prototype system, Fixy.

Fixy has two stages: an offline phase (to learn which data points are likely errors) and an online phase (to rank new potential errors).

In the offline phase, Fixy learns distributions over features, which will subsequently be used in the online phase. As inputs, Fixy takes previously human-labeled data (which can be erroneous!) and features.

In the online phase, Fixy will ingest new data and automatically surface potential errors. Fixy only needs the new data as input in this phase.

Since many ML deployments are continuous, we have access to previously human-labeled data. Furthermore, we have access to existing ML models. Fixy uses these two “organizational resources” (i.e., resources already present in ML deployments) to learn how to rank errors.

We’re omitting how Fixy works under the hood, but please see our full paper for details!

Example: finding missing labels

To understand how to use Fixy, let’s consider the example of finding boxes missed by a human labeler from 3D LIDAR point cloud / camera data. We showed several examples of such errors above.

In this example, we have new, human-labeled data and wish to find missing boxes from these labels. To do we, we provide these labels (which we will find errors in) and predictions from a 3D object detection model over this data (which we will use to find errors).

We can specify the following features over the data: box volume, object velocity, and a feature that selects only model-predicted boxes that don’t overlap with a human label. These features are computed deterministically with short code snippets from the human labels and ML model predictions. Fixy will then execute on the new data and produce a rank-ordered list of possible errors.

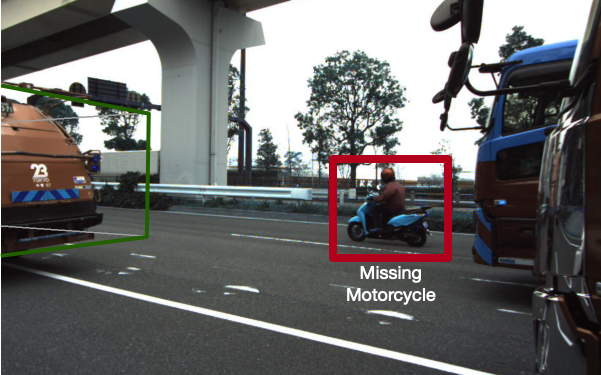

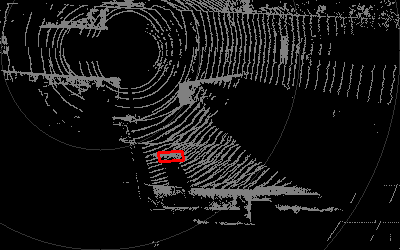

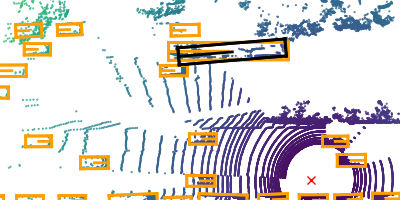

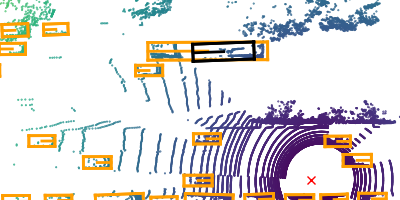

As an example, we’re showing how Fixy can find a missing motorcycle:

Example: finding erroneous model predictions

As another example, let’s use Fixy to find erroneous model predictions. Here, we’re executing the model on fresh data that doesn’t have human labels and we want to find boxes where the model is likely making errors.

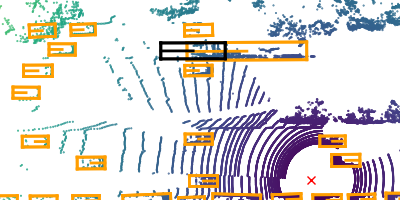

As before, we can specify the features of box volume and object velocity. However, we won’t have the overlap feature since there aren’t human labels in this example. As before, Fixy will execute on the new data and produce a rank-ordered list of possible errors. As we show below, Fixy can aggregate information across time to decide which predictions are likely to be errors.

As an example of inconsistent predictions that Fixy can find, we’re showing the ground truth predictions of a truck in orange and model predictions in black:

Using LOA

Now that we’ve seen what LOA can do, how can we use it? An analyst might first specify a speed prior over transitions. To do so, they could write the following code:

class SpeedTransitionPrior(KDEScalarPrior):

def get_val(self, bundle1, bundle2):

xyz1 = bundle1[0].data_box.center

xyz2 = bundle2[0].data_box.center

dist = np.linalg.norm(xyz1 - xyz2)

time_diff = bundle1[0].ts - bundle2[0].ts

return bundle1[0].cls, dist / time_diff # Return the class and speed

The analyst could also specify a prior over object volumes. As before, this can be done with a few lines of code:

class VolumeObsPrior(KDEScalarPrior):

def get_val(self, observation):

wlh = observation.data_box.wlh

return observation.cls, wlh[0] * wlh[1] * wlh[2]

Finally, the analyst would need to specify if they are looking for likely observations (as in the case of finding missing labels) or unlikely observations (as in the case of finding erroneous ML model predictions). After that, LOA will do the rest! You can see our code for more examples.

Evaluating LOA

We evaluated LOA on two real-world autonomous vehicle datasets: the Lyft Level 5 perception dataset and an internal dataset developed at the Toyota Research Institute (TRI). Both datasets were generated by a leading labeling vendor that has produced labels for many autonomous vehicle companies. We used a publicly available model with the Lyft Level 5 dataset and an internal model with the TRI dataset.

The most critical errors to find objects entirely missed by human annotators. Shockingly, we found that 70% of the scenes in the Lyft validation dataset contained at least one missing object! Also surprisingly, LOA was also able to find errors in every single validation scene that had an error, which shows the utility of using a tool like LOA. We show several examples of such errors below:

We also took a scene from the TRI internal dataset that failed a manual validation check and applied LOA to it. LOA was able to find 75% of the total errors in this scene (18 out of 24 errors)!

Finally, we also show that LOA has high precision when finding errors in our full paper: on the Lyft dataset, LOA has a precision of 100% to 76% in the top 1 and top 10 ranked potential errors.

Conclusions

As we’ve shown, datasets used in mission-critical settings are rife with errors! This can affect model quality, which can in turn lead to safety issues.

We’ve designed LOA to help find such errors and we’ve shown that it can find a wide range of errors on autonomous vehicle datasets. Our code is publicly available here and our preprint is here, with a forthcoming publication in SIGMOD 2022!